AGI is a remarkable new technology that enables computers to think like humans.

AGI is fundamentally different from today’s AI, but already very familiar to us from decades of sci-fi. Think C-3PO from Star Wars or Sonny from I, Robot — systems that can genuinely experience, learn and adapt to the world around them.

The invention of AGI will be the biggest technology revolution since the invention of the internet. AGI systems powered to 10x the capacity of humans will be able to solve problems and achieve scientific breakthroughs that we’ve not been capable of, in a very short space of time — curing disease and expanding life expectancy, ultimately improving quality of life for all humans. AGI systems at 0.1x human capacity will be put to work in their thousands, in factories, warehouses and on construction sites, autonomously working without human intervention or specific programming.

They’ll transform our infrastructure by bringing live human-like intelligence to everything from elevator routing to power grid management, optimizing systems that today run on manual logic and preset parameters.

It will radically transform society, causing us to re-evaluate areas like education - how we learn, and what we learn. More importantly, it will change how we think about work and financial freedom.

This vision is familiar from sci-fi, but distant from the innovations we have today. We’re not close to it right now, but I believe we can build this — there are 8 billion proof points walking around that this kind of intelligence is possible. We can all see that current versions of AI are useful, but not ready to meet the expectations of full autonomy. I believe this is because they’re building on the wrong foundation, the wrong architecture.

What’s wrong with the foundation?

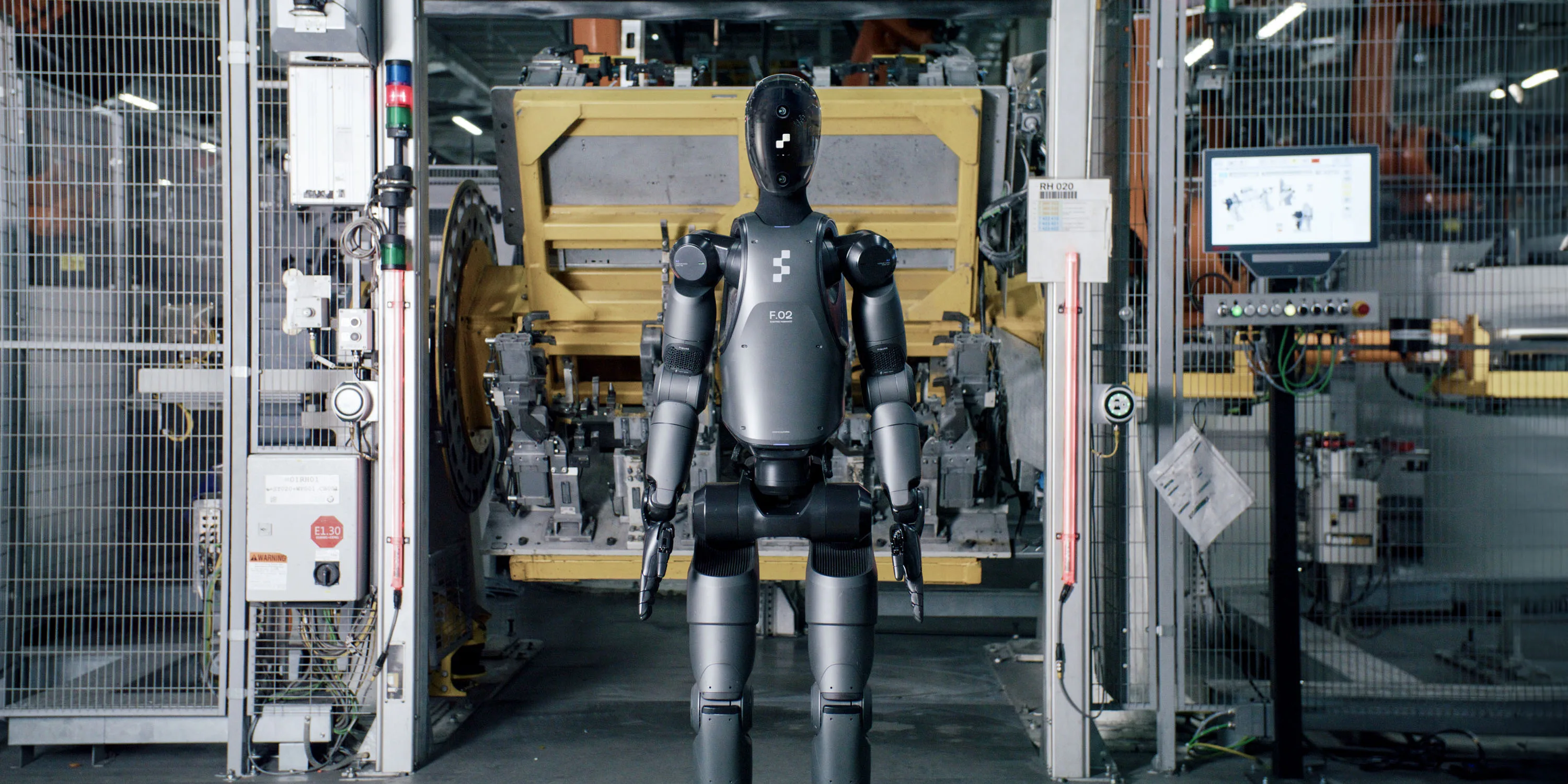

Figure are building humanoid robots with very impressive, futuristic hardware. They look incredible, but they’re limited by their AI software. With millions of hours of training data, they can only “pick and place” items in a controlled environment.

Linear Static Models

AI has been through two major transitions over the past decade. Both times, the limitations of the systems have been clear.

The first modern AI systems became popular around 2015. With new innovations like Computer Vision, they started to get really good at solving well-defined problems. For some basic background on their design: A Machine Learning model has inputs, processing layers (known as “hidden layers”), and outputs. For example, if the problem was “identify cats vs dogs”, the input could be an image of a cat, and every neuron in each layer would activate and process the information, passing their findings to every neuron in the next layer. To start with, the model would have a 50% success rate, by random chance. But then through a training process involving millions of images with feedback on every prediction, the model could tweak its values and reach near 100% accuracy at sorting pictures of cats from dogs.
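To make that concrete, here’s a minimal sketch of such a model in plain Python. It’s a toy under stated assumptions, not how production vision models are built: two made-up numeric features stand in for an image, and `TinyNet`, `make_data` and the layer sizes are names and values I’ve invented for illustration. It does show the shape described above: inputs, one fully-connected hidden layer, an output, and a training loop that nudges the weights after every prediction.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the run is repeatable

def make_data(n):
    """Toy stand-in for images: two invented features, label 0 = cat, 1 = dog."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        centre = 1.0 if label == 1 else -1.0
        features = [centre + rng.gauss(0, 0.3), centre + rng.gauss(0, 0.3)]
        data.append((features, label))
    return data

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TinyNet:
    """Inputs -> one fully-connected hidden layer -> a single output neuron."""

    def __init__(self, n_in=2, n_hidden=3):
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Every hidden neuron sees every input; the output sees every hidden neuron.
        hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        out = sigmoid(sum(w * h for w, h in zip(self.w2, hidden)) + self.b2)
        return hidden, out

    def train_step(self, x, y, lr=0.1):
        """One round of feedback: compare the prediction to the label, tweak the weights."""
        hidden, out = self.forward(x)
        d_out = out - y  # gradient of the cross-entropy loss at the output
        for j, h in enumerate(hidden):
            d_hidden = d_out * self.w2[j] * (1 - h * h)
            self.w2[j] -= lr * d_out * h
            self.b1[j] -= lr * d_hidden
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * d_hidden * xi
        self.b2 -= lr * d_out

def accuracy(net, data):
    correct = sum((net.forward(x)[1] > 0.5) == (y == 1) for x, y in data)
    return correct / len(data)

train, test = make_data(200), make_data(100)
net = TinyNet()
before = accuracy(net, test)  # untrained weights: no better than chance on average
for _ in range(20):           # 20 passes over the training examples
    for x, y in train:
        net.train_step(x, y)
after = accuracy(net, test)
print(f"accuracy before training: {before:.2f}, after: {after:.2f}")
```

Note that nothing about the network’s structure changes during training. Only the numbers stored in `w1`, `b1`, `w2` and `b2` move.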

Google provide an interactive playground to show how this works.

These models became popular around 2015, but have two fundamental limitations: they require millions of training examples, and can only solve the specific problem they’re trained for. They aren’t able to generalize or learn new things beyond their specific training.

Despite that, the argument has always been the same: with more complex models (more hidden layers and neurons), and more training data, these models will be able to generalise and solve any problem better than a human.

Tesla

In 2016, Tesla began selling the Full Self-Driving (FSD) package for $3000. With a future software update powered by cameras and Computer Vision, this upgrade would enable their cars to drive themselves autonomously from A to B. They’d be able to replace a billion human drivers on the road.

The first versions of “Autopilot” arrived quickly, helping the car to stay within lane markings and perform basic maneuvers. But while the price of pre-ordering FSD rose to $15k, the timeline for delivery moved further away, and it remained unclear how exactly this would be achieved. Instead of radical progress, there’s been incremental progress, never quite reaching what was promised.

In 2016, Musk said “I would consider autonomous driving to be basically a solved problem. We’re less than two years away from complete autonomy.” In 2017, “We should be able to drive from California to New York later this year.” In 2018, “We expect to be feature-complete in self driving by this year.” In 2019, “I feel very confident predicting autonomous robotaxis next year.”

Tesla’s claims about FSD are a major driving force behind their share price, and a big part of the reason that Elon Musk is the wealthiest person in the world.

In 2024, after 8 years of development and billions of kilometers’ worth of training data, it remains at Level 2 autonomy: “vehicles that can perform steering and acceleration under certain conditions but still require human oversight.” It still cannot pass a basic driving test. If these AI systems were truly capable of generalising, and could achieve autonomous driving, they would have done it by now. As of 2024, Elon claims FSD will arrive “next year”.

Attention Is All You Need

The most recent major development in AI is the Transformer architecture, which paved the way for Large Language Models (LLMs) like ChatGPT. It was invented by a small team of researchers at Google, who were actually just trying to improve Google Translate, and ended up inventing one of the most significant technological breakthroughs of the decade.

It’s based on the same architecture as Computer Vision — an input, a series of hidden layers, and an output. But the way it works is a bit more complex, designed to be very effective at processing sequences of data. Every time you ask ChatGPT a question, it processes all of your text, passes it through its hidden layers, decides a probability for every single word it could respond with, and then picks a word high on the list, and sends it back to you. Then it processes all of your text again, plus its own response so far, ranks every possible word again, and picks the next word. And so on, until it’s completed a response.
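The loop described above can be sketched in a few lines of Python. The “model” here is a hypothetical lookup table (`TOY_MODEL`) that I’ve made up, and it only looks at the last two words, whereas a real LLM runs the entire text through its hidden layers. I’ve also assumed the simplest possible choice, always taking the top-ranked word. The point is the shape of the loop: score every candidate, pick one, append it, and start again.

```python
# Hypothetical stand-in for a trained model: last two words -> next-word probabilities.
TOY_MODEL = {
    ("the", "cat"): {"sat": 0.9, "ran": 0.1},
    ("cat", "sat"): {"on": 0.95, "down": 0.05},
    ("sat", "on"): {"the": 0.9, "a": 0.1},
    ("on", "the"): {"mat": 0.8, "sofa": 0.2},
    ("the", "mat"): {"<end>": 1.0},
}

def next_word_probabilities(words):
    """Return a probability for each candidate next word, given the text so far."""
    context = tuple(words[-2:])
    return TOY_MODEL.get(context, {"<end>": 1.0})

def generate(prompt, max_words=10):
    words = list(prompt)
    for _ in range(max_words):
        # Re-process the prompt plus everything generated so far.
        probabilities = next_word_probabilities(words)
        # Pick the highest-ranked word (real systems usually sample instead).
        word = max(probabilities, key=probabilities.get)
        if word == "<end>":
            break
        words.append(word)
    return words

print(" ".join(generate(["the", "cat"])))
```

Every word in the response costs one full pass through the model, which is where the compute bill discussed below comes from.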

ChatGPT is incredibly useful, but this technology has a few fundamental limitations:

- They require massive amounts of training data — the entirety of content on the internet — and they take months to train on the most powerful computers.

- They hallucinate, generating nonsense information. Of course, next word prediction doesn’t guarantee truth.

- They aren’t able to generate novel ideas, or come up with something new. They’re simply replicating patterns they’ve observed in all content they’ve been trained on.

- They require massive amounts of computing resource to generate a response, word by word. iPhones are pretty powerful these days, but the new version of Siri with Apple Intelligence only performs the basics on the phone, otherwise outsourcing to giant Apple servers which can handle the computation.

Imagine applying this to autonomous cars or robots, where every single action taken requires incredible amounts of computation. Or trying to run a factory of thousands of autonomous robots, each requiring massive compute even to operate at a basic level.

Not to diminish this breakthrough — there’s likely decades-worth of practical applications ahead. But it’s not going to overcome these limitations, and it’s not the path to AGI.

The reality is that LLMs, no matter how refined, cannot achieve true AGI through incremental improvements. They’ll get better at what they do - generating more coherent text, fewer six-fingered hands in AI art, longer form videos - but these are just optimizations of the same core technology. Everything they produce is a remix of their training data.

We’ve seen the same pattern twice now: we understand the limitations of the technology from the start, and then spend years loosely predicting that it will overcome them “with scale”. But the problem is not the amount of training data or the number of neurons; it’s the architecture.

Path To AGI

I guarantee that while reading this you haven’t once thought about a kangaroo, or Australia, or the concept of a country. Our brains don’t activate every neuron or call on the millions of things we know about, for every action we take. AI neural networks are different: when you send a message to ChatGPT, it activates every neuron in its model, and scores every single word in its vocabulary to determine what comes next. “The cat sat on the…” (mat 99%, kangaroo 0.00001%).
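Here’s a small sketch of that final scoring step. The standard way to turn a model’s raw scores into probabilities is the softmax function. The four-word vocabulary and the scores below are numbers I’ve invented for illustration; a real model does this over tens of thousands of entries, and every one of them gets a probability, however irrelevant.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical raw scores for the word after "The cat sat on the..."
scores = {"mat": 9.0, "sofa": 4.0, "floor": 3.5, "kangaroo": -7.0}
probabilities = softmax(scores)

for word, p in sorted(probabilities.items(), key=lambda item: -item[1]):
    print(f"{word:>9}: {p:.7%}")
```

“kangaroo” ends up with a vanishingly small probability, but it was still computed, on every single step.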

For contrast, think of a mouse - an animal with a tiny brain and limited intelligence. While it can’t match humans or ChatGPT at intellectual tasks (it’s a mouse), it can do something ChatGPT cannot: learn new concepts and apply them to solve novel problems. This is the key difference in how intelligence scales. In nature, larger brains generally correlate with higher intelligence. But AI doesn’t scale the same way - it’s not progressing from mouse-level to human-level intelligence, it’s just getting better at mimicking human responses. If you train ChatGPT at 10% capacity, it’s not “as smart as a mouse” - it’s just producing lower-quality text while lacking the basic problem-solving abilities that even a mouse possesses. Similarly, training it 10x more wouldn’t make it superhuman - it would just get better at imitating human responses. Achieving AGI isn’t just about increasing scale - we need a fundamentally different architecture that better mirrors how biological brains actually work.

The problem is that modern neural networks have this static, linear architecture. When a developer is building a new model, they decide how many hidden layers, how many neurons in each layer, what type of layers, and so on. Then they start training, with every input passing methodically through the model, touching every neuron, and then at the end ranking the probability that the input matches every known concept. This is very different to how the brain works.

The human brain has around 86 billion neurons, each with roughly 10,000 synapses (connections) to other neurons. When input is passed in, it travels through this web of neurons, activating relevant connections until it has determined a result. As the brain interacts with the world, it is constantly evolving its structure to learn new information, changing its own architecture: which neurons are connected, and which will be activated the next time it sees a similar input. This is completely different to a static, linear model, where the number of neurons and the connections remain the same and the organisation is linear, moving forward methodically through the network rather than flowing freely like the activity of a brain.
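A quick back-of-the-envelope calculation, using only the two figures above, shows just how sparse that web is. The comparison with a hypothetical “fully-connected brain” is my own framing, purely to put the numbers in perspective.

```python
NEURONS = 86_000_000_000        # figure quoted above
SYNAPSES_PER_NEURON = 10_000    # figure quoted above

brain_connections = NEURONS * SYNAPSES_PER_NEURON
# Hypothetical comparison: every neuron wired to every neuron.
dense_connections = NEURONS * NEURONS
fraction_wired = brain_connections / dense_connections

print(f"connections in the brain:   {brain_connections:.2e}")
print(f"if it were fully connected: {dense_connections:.2e}")
print(f"fraction actually wired:    {fraction_wired:.2e}")
```

On these figures, each neuron is wired to roughly one in ten million of the others.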

The brain is able to build context. Rather than merely recognising “this is a cat”, it’s associating thousands of concepts and features (it is a certain size, it has fur, it has four legs, it has a face with these features etc.) to make that determination. If a young child sees a horse for the first time, they can immediately use their knowledge of cats and dogs to understand that a horse is also a 4-legged animal, much larger, and with a longer face. (An ML model would need to be re-trained on a million photos of horses.)

Building AGI

If I had to predict what an AGI system could look like:

- They will be structured as a web of partially-connected neurons, rather than a series of fully-connected linear layers.

- Their structure will be dynamic, the connections will evolve in response to new input.

- The process of evolution will be finely tuned through the system’s “DNA”.

- The output/conclusion from one set of neurons will be passed back up the chain. In other words, it’s processing information dynamically and non-linearly.
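To be clear, nobody knows what a working version of this looks like, and the following is only a toy to make the four points above tangible. Everything in it is hypothetical: the `DynamicNet` class, the concept names, and the tuning constants (which play the role of the “DNA”). It uses a simple “neurons that fire together, wire together” rule, which is one possible assumption among many.

```python
class DynamicNet:
    """Hypothetical sketch: a sparse web of neurons whose wiring changes with use."""

    def __init__(self, grow=0.2, decay=0.05, new_link=0.3, threshold=0.4):
        # The "DNA": fixed constants that tune how the structure evolves.
        self.grow, self.decay = grow, decay
        self.new_link, self.threshold = new_link, threshold
        self.links = {}  # links[src][dst] = connection strength

    def connect(self, src, dst, strength=0.5):
        self.links.setdefault(src, {})[dst] = strength

    def propagate(self, inputs):
        """Spread activity along strong links only; unrelated neurons never run."""
        fired, frontier = set(inputs), set(inputs)
        while frontier:
            reached = set()
            for src in frontier:
                for dst, strength in self.links.get(src, {}).items():
                    if strength >= self.threshold and dst not in fired:
                        reached.add(dst)
            fired |= reached
            frontier = reached
        return fired

    def experience(self, inputs):
        """Process an input, then rewire based on what fired together."""
        fired = self.propagate(inputs)
        # Strengthen links between co-active neurons; weaken and prune the rest.
        for src, targets in self.links.items():
            for dst in list(targets):
                if src in fired and dst in fired:
                    targets[dst] = min(1.0, targets[dst] + self.grow)
                else:
                    targets[dst] -= self.decay
                    if targets[dst] <= 0:
                        del targets[dst]
        # Grow new, initially weak links between co-active neurons.
        for src in fired:
            for dst in fired:
                if src != dst and dst not in self.links.get(src, {}):
                    self.connect(src, dst, self.new_link)
        return fired


net = DynamicNet()
net.connect("four_legs", "animal")
net.connect("fur", "animal")
net.connect("animal", "four_legs")  # a feedback link, back "up the chain"

before = net.propagate({"horse"})   # "horse" is unknown: nothing else fires
for _ in range(2):                  # see a horse, and its four legs, twice
    net.experience({"horse", "four_legs"})
after = net.propagate({"horse"})    # "horse" alone now reaches "animal"

print(sorted(before), "->", sorted(after))
```

Two exposures are enough for “horse” to hook into what the network already knows about four-legged animals, with no retraining pass over the whole system. Whether anything like this scales is exactly the open question.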

Building AI that works this way will be very difficult at first. The brain is finely tuned through DNA, which makes it effective at connecting concepts, learning, interacting with the world, efficiently storing and retrieving memories, and so on.

It’ll be important to start small, maybe at the intelligence of a mouse (71 million neurons). The first systems will demonstrate a basic ability to interact with the world and learn simple concepts. Then, we can scale these systems up to the intelligence of a cat (250m neurons), then a monkey (1.3bn neurons), then a human (86bn)… then 10x a human.

A fundamental feature of these systems is that we can scale them to meet our needs. Most AGI systems won’t be spec’d anywhere near human-level intelligence, because they don’t need to be. Thousands of autonomous workers on a Martian construction site can go about their work very effectively, with mouse-level intelligence.

AGI also brings new opportunity to go beyond human capabilities. Humans can only learn from our own experience. Every new human born has to start again building their knowledge, and go through decades of learning and growth. AGI systems will be connected to the internet, and able to share world knowledge with each other far more efficiently than the slow learning process of a human. Imagine how quickly you could learn to drive if you had 1 million versions of you all learning at the same time, in different environments, and feeding back the knowledge instantly. Memories could be shared too. Humans have developed analogue and digital ways (stories, photos, videos) to share memories, but AGI systems could do this far more efficiently, and more accurately.

There’s massive potential for very capable AGI systems: higher brain power than a human, synchronised learning and shared knowledge.

Who Will Build AGI

I think AGI will be invented by a small team of researchers working in an opposing direction to where the big AI companies are piling their resources.

The person most aligned with my thinking is John Carmack (known for developing Doom, Quake and Oculus), who started a small research lab called Keen Technologies, to develop AGI. Here’s some of what he said during his interview on the Lex Fridman Podcast:

Everything John Carmack says in this video, and in this interview: 🧡

I’d recommend watching the whole thing.

The Future

While LLMs are very impressive and useful, the gap between what we have now and what we could build is still vast. We’d get to AGI sooner if there were more interest in research aimed directly at it, rather than a misplaced hope that incremental progress on LLMs will somehow overcome their limitations.

I’d like to see more conversation in the AI space around genuine AGI advancements. Every time I read down one of the many AI newsletters I subscribe to, I’m searching for AGI goodness. I can’t think of a better scenario than a healthy split between advancing LLMs and fresh research paths towards AGI.

What more is there to say? I’m excited for the day when we start to see AI systems built closer to how intelligence actually works.