It’s an exciting time in robotics. The robots we’ve dreamed about for decades, seen only in sci-fi, are now finally arriving. But while robot hardware is becoming sophisticated, the intelligence remains primitive, lagging at least 10 years behind. The current models are struggling to generalise, and the leading labs are being forced to pivot from shipping with autonomy to relying on tele-operation, a huge setback in their plans.

I believe the problem is not a lack of data but the underlying architecture. It’ll take a new approach to intelligence for robots to understand the world. This type of problem is familiar to me. At my last startup, we developed the first-and-only working solution for indoor navigation, with a breakthrough new approach using the same SLAM, LiDAR and localisation technologies that are core to robotics.

In 2026, I’m starting i10e, a robot intelligence research lab. The name is a numeronym for “intelligence”. i10e’s mission is to build a new architecture for intelligence, inspired by the brain. If successful, this will enable robots to generalise as easily as humans, making them useful and commercially viable.

Where the industry is today

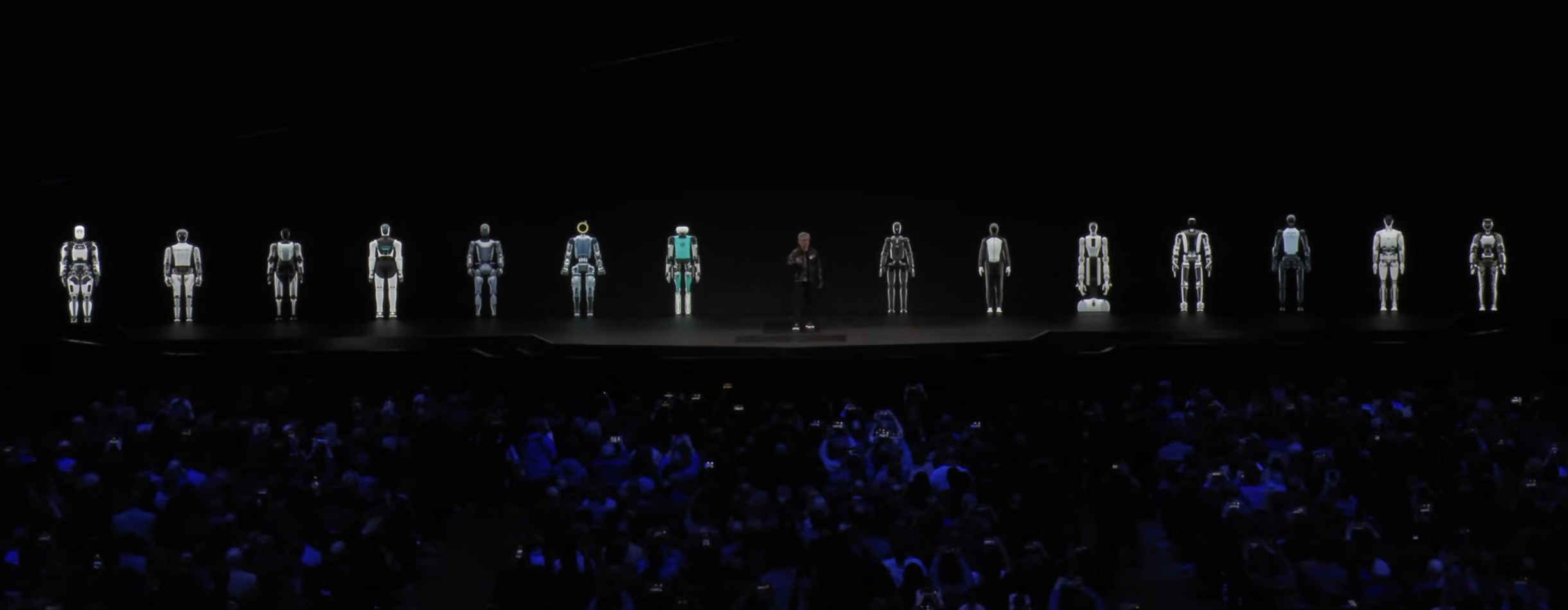

NVIDIA CEO Jensen Huang Keynote at CES 2025

Last year, I travelled around the world and met with many robotics founders and teams. Here’s what I’ve learned about the space.

Marketing demos are often misleading. 1X recently began taking pre-orders for their home humanoid Neo, said to be shipping later this year. When I met their CEO at their Palo Alto HQ for a tour last summer, they gave a hand-wavy explanation for why they wouldn’t be able to show me anything autonomous (it would be a lot of work to set up a unit). A few months later, WSJ uncovered that the robot would be shipping tele-operated at first, with autonomy said to arrive later. This was strikingly different to their previous marketing, but they’re not the only ones.

Tesla creates the impression of autonomy at their events, while actually their robot is tele-operated by a person in the next room. Figure’s robot is only ever demonstrated under the tightest of controlled conditions. And so on.

Quoting Chris Paxton, a long-time industry analyst and insider:

The general rule when watching any robot video is “it can do exactly what you see it do, and literally nothing else”

Everyone is taking the same technical approach, but nobody has a lead. The algorithms and methods used are the same across the industry: Vision-Language-Action models trained on tele-operation and sim data. Progress is incremental, with capabilities still limited to solving narrow tasks in controlled environments. The demos we see of robots making coffee or folding clothes require hundreds of hours of training data for each of those tasks, and are very sensitive to changes in the environment, like different lighting. Researchers believe massive data collection will lead to some level of generalisation, but so far this has not worked due to how complex the real world is.

The whole industry has been caught off-guard as hardware outpaces intelligence, and commercialisation will stay severely limited until this problem is solved. As more companies build better hardware, the gap will only widen.

I believe an intelligence breakthrough is required for these robots to become useful and commercially valuable, and that this breakthrough lies in a new architecture, inspired by the brain. Even low-intelligence animals like mice are able to understand the world and quickly adapt to problems they haven’t seen before, whereas a highly-trained AI model cannot. The key architectural differences are that LLMs are linear and fully-connected static functions, calling on every neuron for every new token, whereas a brain is non-linear, sparsely connected and dynamic: it only calls on neurons when relevant, and constantly changes its structure as we experience new things. We’re able to learn new things without forgetting other important things, and we can adapt existing knowledge to understand new things quickly.
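To make the dense-versus-sparse contrast concrete, here is a toy sketch in Python. It is only an illustration under my own assumptions, not i10e’s architecture: the function names, the k-winners-take-all selection and the Hebbian-style update rule are all hypothetical choices made for this example.

```python
import random

def dense_forward(weights, x):
    # Dense: every unit produces an output for every input.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def sparse_forward(weights, x, k):
    # k-winners-take-all: keep the k strongest units, silence the rest.
    # This toy still scores every unit to pick the winners, so it shows
    # sparse activity only, not a saving in compute.
    scores = dense_forward(weights, x)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    active = set(ranked[:k])
    out = [s if i in active else 0.0 for i, s in enumerate(scores)]
    return out, active

def local_update(weights, x, active, lr=0.1):
    # Hypothetical Hebbian-style rule: only the active units move towards
    # the input; the weights of inactive units are left untouched.
    for i in active:
        weights[i] = [w + lr * (xi - w) for w, xi in zip(weights[i], x)]

rng = random.Random(0)  # fixed seed so the run is repeatable
weights = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(8)]
x = [1.0, 0.0, -1.0, 0.5]

before = [row[:] for row in weights]
out, active = sparse_forward(weights, x, k=2)
local_update(weights, x, active)
changed = [i for i in range(len(weights)) if weights[i] != before[i]]
print("active units:", sorted(active), "updated units:", changed)
```

In the dense case all eight units respond to every input. In the sparse case only two units respond, and only those two are modified by learning, which is one simple way that new experience can leave most existing knowledge untouched.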

My first major goal with i10e is to build a new intelligence architecture, and to demonstrate this working at a small scale, matching the capabilities of a mouse. I believe this new architecture is the breakthrough we need to enable robots which can understand the world, adapt existing knowledge, and learn from experience.

Why I’ve decided to work on this

There are many smart researchers in AI and robotics, but almost everyone is focussed on a narrow band of work around the transformer architecture. The space of possible architectures is far wider than that, and I’d personally love to see more varied competition.

I’ve spent my career approaching long-standing technical problems from first principles, to achieve a breakthrough.

At my last startup, Hyper, I led a research lab to build a breakthrough solution for precise indoor location, a problem that had eluded researchers for decades. Our technology fused AR SLAM and LiDAR with WiFi signals to eliminate noise and drift. The results amazed customers and led to a global rollout with IKEA.

Before that, I built an open-source technology to combine GPS with AR, with smart algorithms to enable real-world overlays, like street directions and points of interest. This is the largest open-source project for Apple’s AR and Location frameworks, and is embedded within Google Maps.

Robot intelligence is another area where I have a clear technical vision, and I’m excited to bring my experience to this problem.

The Opportunity

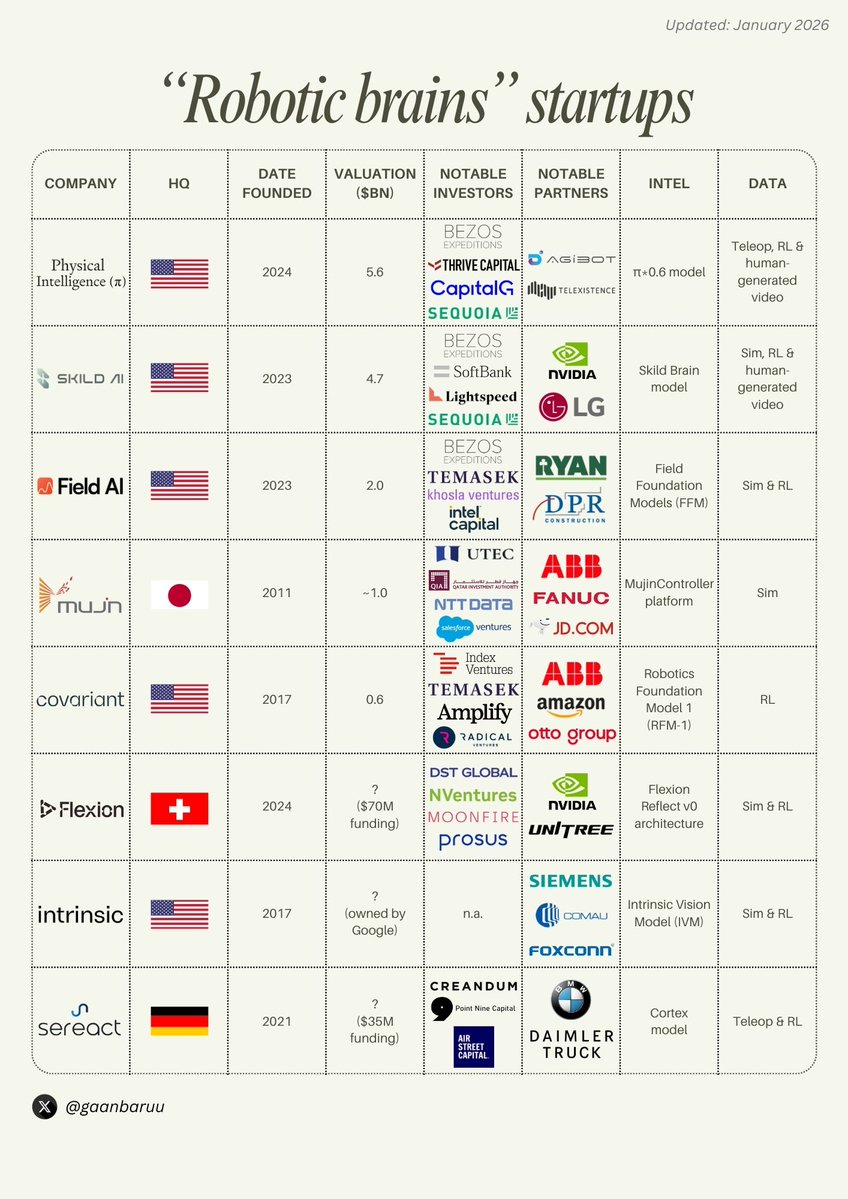

This graphic shows the valuations, in $bn, of nascent “robotic brains” startups founded within the last few years.

Robotics is growing quickly, with massive future potential. The leading players in technology have either already entered the space, invested heavily, or will do so in the near future. Jeff Bezos has invested in three startups on this list, and another was acquired by Amazon.

Large-scale acquisitions are common in AI and robotics, especially when a company has novel technology or talent to accelerate their efforts. A few examples:

- 2026, Mobileye acquires Mentee Robotics for $900m

- 2025, Meta takes a 49% stake in Scale AI for around $14bn

- 2025, Google acqui-hires Windsurf for $2.4bn

- 2025, SoftBank agrees to acquire ABB’s robotics division, a maker of industrial robot arms, for around $5.4bn

- 2024, Amazon acqui-hires founders of AI robotics startup Covariant for $400m

The upside opportunities for i10e are a large acquisition of our intelligence technology, or growing into an OpenAI-level startup that sells intelligence for a billion robots.

Initial plans

Research

- Background research into Reinforcement Learning and SOTA methods

- Build from an RL foundation and explore ideas around continuous learning, non-linear architectures, genetic mutation, and so on

- Demonstrate an ability to “understand the world, adapt existing knowledge, and learn from experience” at a basic level, matching the intelligence level of a mouse

Networking

- Release research and progress updates, with marketing demos to build our profile and attract future talent, investment, partners etc.

- Continue to meet founders and teams around the world, attend events, and build connections within the intelligence and robotics space

- Build a founding team around me. In addition to researchers, this would in particular be product, marketing, commercial and operations people. Here are the profiles of several people I’m actively considering:

  - Research Eng, prev at autonomous driving co in SF, recently moved back to Europe

  - VP of Corporate Strategy at robotics company, commercially focussed

  - Sales + Growth Strategist in aerospace/defense, moving into robotics

Many research labs are offshoots from academia and don’t show seriousness about commercialisation or growth. In contrast, although I’m passionate about research, I’ve previously run a startup which signed many commercial deals, up to seven-figure ARR.

I am raising a small friends-and-family (F+F) round of around £250k, which gives up to 18 months of runway: enough time to support this initial research and then raise a larger round once it makes sense. I’m looking for my first backers, who are comfortable taking early bets.

If this early research is successful, i10e will take on much larger investment in the future to support fast growth.